Note: This article assumes a working knowledge of the Csound music programming language API and Apple’s Core Audio framework. If you are not yet familiar with them, I strongly suggest first having a look at csounds.com and the Core Audio documentation.

Intro

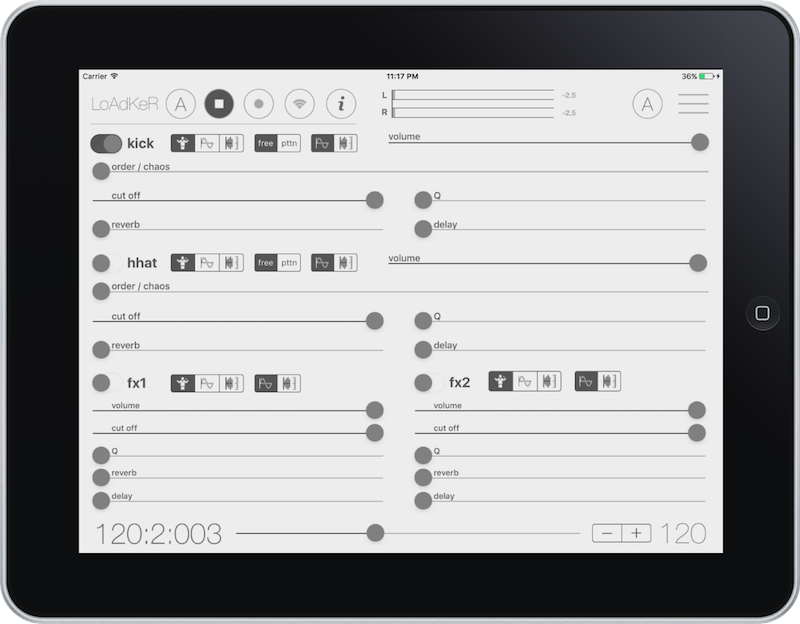

So, let’s suppose that we are developing an iOS music app that creates a stream of music events (a sequencer for example) and renders them into sound. An obvious question is, how do we handle the rendering process so that the resulting audio is in sync with our events? In the following example I will show a way based on the Csound API and the render callback function of Core Audio.

Unfortunately, iOS is not a real-time operating system, so most of its timing mechanisms are not accurate or reliable enough to satisfy the tight constraints of real-time digital audio (typically with tolerances of 5 ms or lower).

The current popular solution is to move the event scheduling inside the audio thread and, more specifically, the render callback function that is attached to an Audio Unit:

OSStatus renderCallback(void *inRefCon,
AudioUnitRenderActionFlags *ioActionFlags,
const AudioTimeStamp *inTimeStamp,
UInt32 inBusNumber,
UInt32 inNumberFrames,
AudioBufferList *ioData)

There are two reasons for that:

- The render callback provides us with timing information that we can use for our own scheduling

- The audio thread has high priority so the timing information is consistent and reliable

Note, however, that a few restrictions apply when working on the audio thread. Objective-C or Swift code, locks (with the exception of try locks), and blocking operations such as disk I/O or network access should generally be avoided, since they can stall the thread and lead to the dreaded clicks and pops that ruin the user experience. Michael Tyson, the creator of AudioBus, has written an excellent post on the subject.

So, how do we use the render callback function to schedule and render our events? That can be done in four steps:

- Step 1: extract time information from the callback function

- Step 2: scan through the music events list and find those that are scheduled to start playing within the duration of the current audio buffer

- Step 3: convert these events to Csound score and feed it to the score interpreter

- Step 4: render the resulting Csound score into audio and copy the result into the output buffers

Step 1

As stated above, the callback receives time information (a timestamp) that indicates when the current audio buffer will actually reach the audio output. That information is stored in

inTimeStamp->mHostTime

as machine-specific host time,

UInt64 mHostTime;

so we need to convert it into seconds:

Float64 timeAtBeginning = convertHostTimeToSeconds(inTimeStamp->mHostTime);
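The conversion helper itself is not shown here, so the following is only a minimal sketch of what it might do. The function name hostTicksToSeconds and its parameters are hypothetical. I am assuming that the timebase ratio (numer/denom) is read once, outside the audio thread, for example with mach_timebase_info(), and then passed in or cached.

```c
#include <stdint.h>

/* Hypothetical helper (not part of Core Audio or Csound).
 * Converts host ticks to seconds using a timebase ratio.
 * Assumption: numer/denom come from mach_timebase_info(), queried once
 * at setup time rather than inside the render callback. */
double hostTicksToSeconds(uint64_t hostTicks, uint32_t numer, uint32_t denom)
{
    /* ticks * numer / denom yields nanoseconds */
    double nanoseconds = (double)hostTicks * (double)numer / (double)denom;

    /* nanoseconds -> seconds */
    return nanoseconds / 1e9;
}
```

For instance, with a ratio of 125/3 (which corresponds to a 24 MHz tick rate), 24,000,000 ticks convert to one second.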

Next we calculate the buffer duration through the following formula:

bufferDuration = inNumberFrames / sampleRate;
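One detail worth spelling out: inNumberFrames is an integer, so the division only gives a fractional result if it is carried out in floating point. A small sketch, assuming the sample rate is stored as a double (the function name is hypothetical):

```c
#include <stdint.h>

/* Hypothetical helper: duration of one render buffer in seconds.
 * The explicit cast keeps the division in floating point even if the
 * surrounding code later changes the type of the sample rate. */
double bufferDurationSeconds(uint32_t numberOfFrames, double sampleRate)
{
    return (double)numberOfFrames / sampleRate;
}
```

With 512 frames at 44100 Hz this comes out at roughly 11.6 ms per buffer.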

With these data in hand, we can now proceed to step 2.

Step 2

The next step is pretty straightforward: we scan through our events list and find those that fall (meaning they should start rendering) between timeAtBeginning and timeAtBeginning + bufferDuration.

The question here is, how do we access that list, since Obj-C or Swift messages/calls are discouraged inside the render callback? Fortunately, the callback provides us with a generic pointer (inRefCon) that we can point at our own data, e.g. a C struct.

MyData *myData = (MyData *) inRefCon;
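To make the scan concrete, here is a rough sketch in plain C. The layout of MyData and the MusicEvent type are my own assumptions for illustration, as is the function name findDueEvents; a real app would define its own structures. The window is treated as half-open, so an event that falls exactly on a buffer boundary is picked up by one buffer only.

```c
#include <stddef.h>

/* Hypothetical event type, for illustration only. */
typedef struct {
    double startTime;   /* absolute start time in seconds */
    double duration;    /* length of the event in seconds */
    int    instrument;  /* Csound instrument number */
} MusicEvent;

/* Hypothetical layout of the struct passed through inRefCon. */
typedef struct {
    MusicEvent *events;
    size_t      eventCount;
} MyData;

/* Stores the indices of all events that start inside
 * [windowStart, windowStart + windowDuration) and returns how many
 * were found (at most maxOut). No allocation, no locks. */
size_t findDueEvents(const MyData *data, double windowStart,
                     double windowDuration, size_t *outIndices, size_t maxOut)
{
    double windowEnd = windowStart + windowDuration;
    size_t found = 0;

    for (size_t i = 0; i < data->eventCount && found < maxOut; i++) {
        double t = data->events[i].startTime;
        if (t >= windowStart && t < windowEnd) {
            outIndices[found++] = i;
        }
    }
    return found;
}
```

A linear scan like this is fine for short lists; if the events are kept sorted by start time, the loop could stop at the first event past the end of the window.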

After finding the events it is then time to move to:

Step 3

Translate the events into Csound score: Csound contains a powerful and flexible score interpreter that can receive both pre-written and ‘real-time’ events as text, which it then ‘performs’ (renders into audio).

This ‘real-time’ event scheduling works as follows: the reference score time for new events between render calls is 0. Thus, a score event that starts at 0 will start rendering immediately with the next call. An event that starts at x seconds, on the other hand, will start rendering x seconds after the moment it reaches the score interpreter.

That feature allows us to schedule our events with great precision. Let’s assume, for example, that the time at the buffer’s beginning is:

timeAtBeginning = 53.75231 seconds

and one of our events starts at

timeOfEvent = 53.753 seconds

Then, we simply calculate the difference between them and we use the difference as the event’s Csound score start time:

Float64 eventStartTime = timeOfEvent - timeAtBeginning;

We can then send the score to the interpreter by using one of the available API functions, for example csoundReadScore().
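As a sketch of this step, the event can be turned into a one-line score statement of the form "i instrument start duration" before being handed to csoundReadScore(). The helper name formatScoreEvent and the clamping of late events to 0 are my own assumptions, not something Csound requires. Note also that snprintf() is not strictly real-time safe, so treat this as an illustration rather than a production recipe.

```c
#include <stdio.h>
#include <stddef.h>

/* Hypothetical helper: writes a Csound "i" statement into out.
 * The start time is relative to the beginning of the current buffer.
 * Returns the number of characters written, or -1 if out is too small. */
int formatScoreEvent(char *out, size_t outSize, int instrument,
                     double eventTime, double bufferStartTime, double duration)
{
    double start = eventTime - bufferStartTime;

    /* Assumption: an event that is already late plays as soon as possible. */
    if (start < 0.0) {
        start = 0.0;
    }

    int n = snprintf(out, outSize, "i %d %.6f %.6f", instrument, start, duration);
    return (n < 0 || (size_t)n >= outSize) ? -1 : n;
}
```

For an event at 53.753 s, a buffer starting at 53.75231 s, instrument 1 and a duration of half a second, the resulting text is "i 1 0.000690 0.500000", which could then be passed to csoundReadScore().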

Step 4

The final step is to call one of Csound’s render functions, csoundPerformKsmps() or csoundPerformBuffer(), and copy the result to the audio buffers. It is important to note that if you have any new events to render, you have to send them to the score interpreter BEFORE calling any rendering function!
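The copy itself might look something like the sketch below. It rests on several assumptions: that the rendered block (for example the one returned by csoundGetSpout() after a csoundPerformKsmps() call) holds interleaved samples, that those samples are doubles (this depends on how Csound was built), and that the Audio Unit expects one non-interleaved float buffer per channel. The function name deinterleaveBlock is hypothetical.

```c
#include <stddef.h>

/* Hypothetical helper: copies one rendered block of interleaved samples
 * into per-channel output buffers, scaling by 1/zeroDbfs so that the
 * result lies in the -1..1 range.
 * outOffset is the frame position inside the output buffers, which lets
 * several Csound blocks be written into one larger Core Audio buffer. */
void deinterleaveBlock(const double *interleaved, size_t frames, size_t channels,
                       double zeroDbfs, float **outChannels, size_t outOffset)
{
    for (size_t f = 0; f < frames; f++) {
        for (size_t c = 0; c < channels; c++) {
            double sample = interleaved[f * channels + c] / zeroDbfs;
            outChannels[c][outOffset + f] = (float)sample;
        }
    }
}
```

If inNumberFrames is a multiple of ksmps, the callback can render and copy one block at a time, advancing outOffset by ksmps after each call.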

Conclusion

This is one of the many possible ways to synchronise the rendering of music events in iOS and, for me, one that offers a good degree of timing accuracy, which is crucial when your app needs to synchronise with others (Ableton Link anyone?).

If you want to dive further into Csound in iOS, you can check out the Csound for iOS implementation, developed by Steven Yi, Victor Lazzarini and Aurelius Prochazka. It is an excellent starting point and will help you get an idea of how to integrate Csound into your iOS project.

Thanks for reading!